Creating high-quality 3D content has always been a complex and time-consuming task. Whether it’s for games, animation, virtual reality, or product visualization, traditional 3D modeling demands skilled artists, powerful tools, and a lot of patience. But now, things are changing—thanks to Artificial Intelligence.

One of the most exciting developments in this space is Neural Radiance Fields (NeRF). It’s an advanced AI technique that can take regular 2D images and turn them into detailed, photorealistic 3D scenes. With NeRF technology, content creators, animators, developers, and even hobbyists can build realistic 3D environments without manually sculpting every object.

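The AR/VR market, one of the biggest potential beneficiaries of NeRF, is projected by some industry forecasts to grow from $38.5 billion in 2023 to more than $150 billion by 2030.

Companies like Luma AI and Polycam are rapidly commercializing NeRF-based capture tools, with the commercial platforms reporting millions of scans and uploads, while open-source frameworks such as Nerfstudio make the same techniques available to developers and researchers.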

In this blog, we’ll break down what NeRF is, how it works, and how it’s revolutionizing the world of 3D content creation.

What is NeRF (Neural Radiance Fields)?

Neural Radiance Fields, or NeRF, is a type of AI model that reconstructs 3D scenes from 2D images. It was introduced in 2020 and quickly caught the attention of the graphics and AI communities. At its core, NeRF is a neural network trained to predict the color and density of points in 3D space.

Instead of building 3D objects manually using tools like Blender or Maya, NeRF learns to generate realistic 3D representations from a collection of 2D photos taken from different angles. What makes it powerful is its ability to create highly detailed, photorealistic scenes that reproduce the lighting, shadows, and view-dependent reflections captured in the photos.

This approach is redefining how we think about AI 3D rendering, making it faster, more accessible, and a lot more accurate.
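To make the "predict the color and density of points" idea concrete, here is a minimal sketch of what querying such a network could look like. It is not a real NeRF: the weights are random and untrained, the layer sizes are tiny, and the names `TinyRadianceField`, `positional_encoding`, and `query` are invented for this illustration. The sine/cosine encoding of coordinates, and the split where density depends only on position while color also sees the viewing direction, follow the design described in the original 2020 NeRF paper.

```python
import math
import random


def positional_encoding(x, num_freqs=4):
    """Expand coordinates into [x, sin(2^k x), cos(2^k x)] features."""
    features = list(x)
    for k in range(num_freqs):
        for value in x:
            features.append(math.sin((2.0 ** k) * value))
            features.append(math.cos((2.0 ** k) * value))
    return features


def make_layer(n_in, n_out, rng):
    """Random weights and zero biases for one dense layer (untrained)."""
    scale = 1.0 / math.sqrt(n_in)
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    return weights, [0.0] * n_out


def dense(layer, inputs):
    """Apply one dense layer: weights @ inputs + biases."""
    weights, biases = layer
    return [sum(w * v for w, v in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    return [max(0.0, v) for v in values]


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


class TinyRadianceField:
    """Hypothetical, untrained stand-in for a NeRF network."""

    def __init__(self, hidden=16, num_freqs=4, seed=0):
        rng = random.Random(seed)
        self.num_freqs = num_freqs
        pos_dim = 3 + 3 * 2 * num_freqs
        self.trunk = make_layer(pos_dim, hidden, rng)
        self.density_head = make_layer(hidden, 1, rng)
        # The color head also receives the raw 3D viewing direction.
        self.color_head = make_layer(hidden + 3, 3, rng)

    def query(self, position, view_dir):
        """Return (rgb, density) for one 3D point seen from view_dir."""
        encoded = positional_encoding(position, self.num_freqs)
        hidden = relu(dense(self.trunk, encoded))
        # Density depends on position only and is clamped to be non-negative.
        density = max(0.0, dense(self.density_head, hidden)[0])
        # Color is squashed into the 0..1 range per channel.
        rgb = [sigmoid(v)
               for v in dense(self.color_head, hidden + list(view_dir))]
        return rgb, density


field = TinyRadianceField()
rgb, density = field.query((0.1, 0.2, 0.3), (0.0, 0.0, 1.0))
```

A trained model would have the same interface, with weights fitted to the input photos instead of drawn at random.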

The AI Magic Behind NeRF: How It Works

To understand NeRF technology, it helps to look under the hood a bit.

When you give NeRF multiple images of a scene, along with the camera position and orientation for each one (in practice usually estimated with a structure-from-motion tool such as COLMAP), it uses a neural network to predict how light interacts with each point in that scene. Basically, for any point in 3D space, it estimates two things:

- What color that point should appear from a given camera view

- How much light is absorbed or scattered at that point (density)

This process is called volumetric rendering, and it’s powered by a technique known as ray marching. Rays are cast from the camera’s viewpoint into the 3D scene, one per pixel, and NeRF samples points along each ray to compute how much light the ray gathers as it passes through space. The samples along a ray are blended into a single pixel color, and the pixels together form an image of the scene that looks incredibly real.

The network is trained by rendering the scene from the known camera positions, comparing the rendered pixels with the real photos, and adjusting its weights to reduce the difference. Once trained, it can render the scene from any new angle, even ones it hasn't seen before.
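The ray marching step described above can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a production renderer: `toy_field` is a hypothetical hand-written stand-in for a trained network (a red ball of radius 1 at the origin), samples are evenly spaced, and `render_ray` is a name chosen for this example. The blending rule, where each sample contributes its color weighted by its own opacity and by how much light survived the samples in front of it, is the standard volume rendering approximation used by NeRF.

```python
import math


def toy_field(point, view_dir):
    """Hypothetical stand-in for a trained NeRF: a red ball at the origin."""
    inside = math.sqrt(sum(c * c for c in point)) < 1.0
    density = 5.0 if inside else 0.0
    return (1.0, 0.2, 0.2), density


def render_ray(origin, direction, field, near=0.0, far=6.0, num_samples=64):
    """March along one ray and blend the samples into a single pixel color."""
    step = (far - near) / num_samples
    color = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light not yet blocked along the ray
    for i in range(num_samples):
        t = near + (i + 0.5) * step  # midpoint of the i-th segment
        point = [o + t * d for o, d in zip(origin, direction)]
        rgb, density = field(point, direction)
        # Opacity of this segment: denser material blocks more light.
        alpha = 1.0 - math.exp(-density * step)
        weight = transmittance * alpha
        for channel in range(3):
            color[channel] += weight * rgb[channel]
        transmittance *= 1.0 - alpha
    opacity = 1.0 - transmittance
    return color, opacity


# A ray that passes through the ball, and one that misses it entirely.
hit_color, hit_opacity = render_ray((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), toy_field)
miss_color, miss_opacity = render_ray((0.0, 3.0, -3.0), (0.0, 0.0, 1.0), toy_field)
```

Rendering a full image repeats this for one ray per pixel. Training compares those pixel colors with the real photos, which is why the whole computation needs to be differentiable in an actual implementation.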

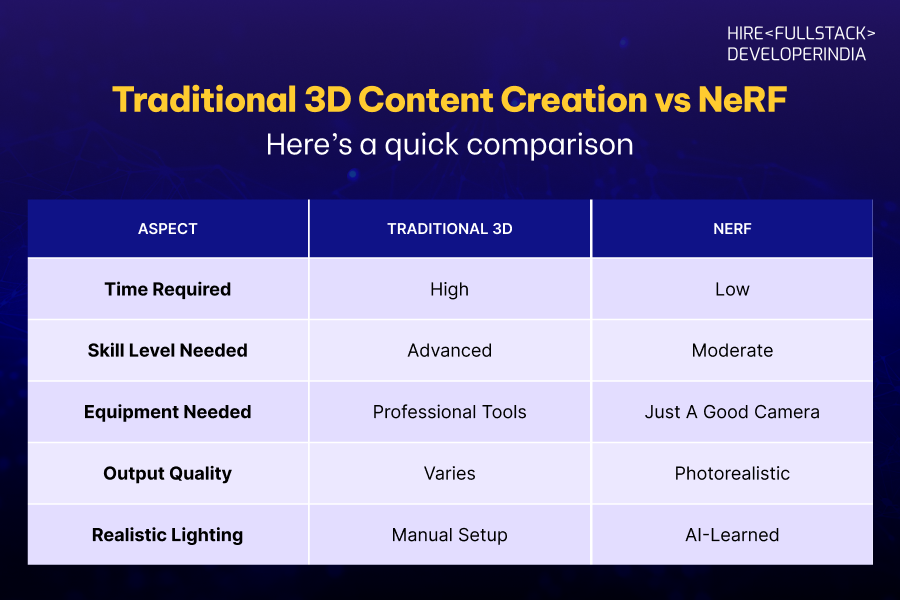

Traditional 3D Content Creation vs NeRF

Creating 3D content traditionally involves complex modeling, texturing, lighting, and rendering workflows. Artists often spend hours or even days working on a single model or scene. These methods also require expensive hardware and a steep learning curve.

In contrast, NeRF technology automates a large part of this process. By feeding in multiple 2D photos, it can quickly generate a high-quality 3D representation of the subject. No sculpting, no texture painting, no manual lighting setups. Just results.

Here’s a quick comparison:

That said, NeRF does have limitations, especially when it comes to editing the generated 3D model. Unlike meshes, NeRF outputs aren’t directly editable in traditional tools. Still, the gains in speed and realism are hard to ignore.

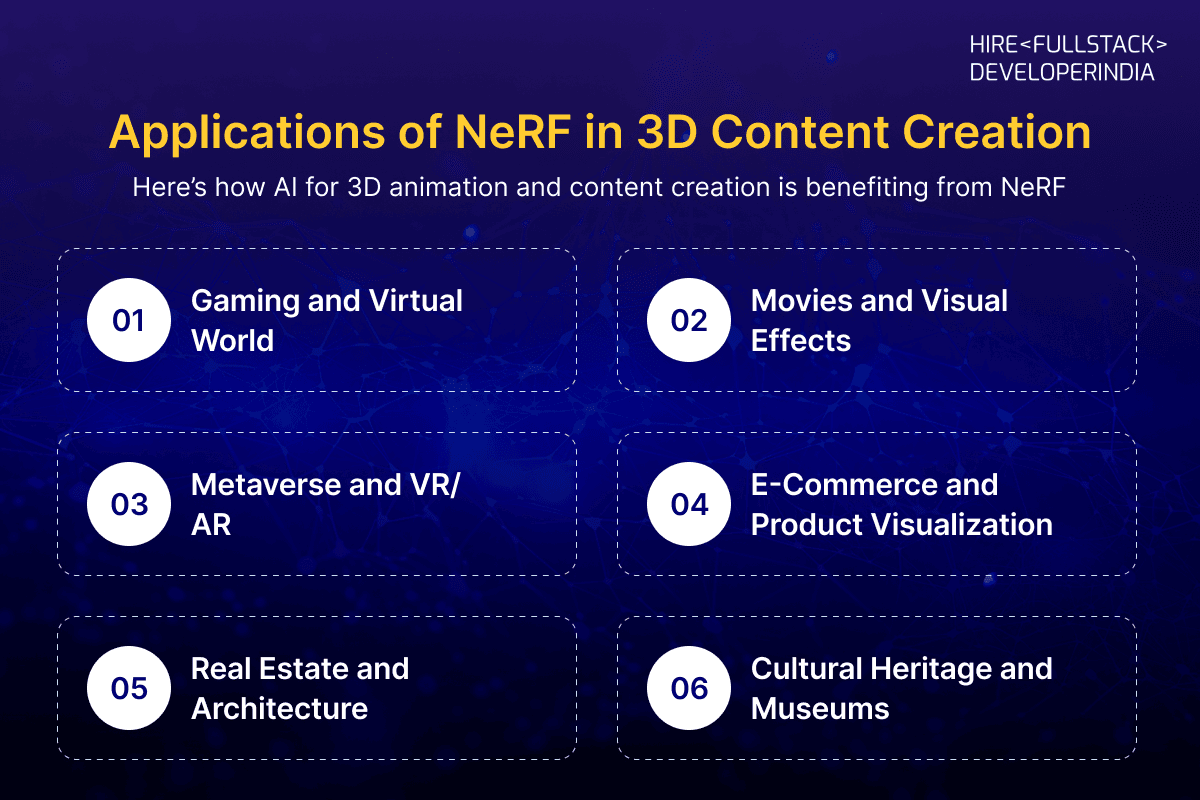

Applications of NeRF in 3D Content Creation

Neural Radiance Fields are already making an impact across several industries. Here’s how AI for 3D animation and content creation is benefiting from NeRF:

1. Gaming and Virtual Worlds

NeRF is used to create game environments with realistic textures and lighting by simply scanning real-world locations. Developers can generate immersive 3D levels much faster than traditional methods allow.

2. Movies and Visual Effects

Film studios use AI 3D rendering to create backgrounds, props, or entire environments. NeRF can help recreate real locations with incredible realism, perfect for virtual production.

3. Metaverse and VR/AR

For virtual reality and augmented reality apps, NeRF helps generate lifelike spaces users can explore. Real-world objects and scenes are quickly digitized, offering immersive experiences.

4. E-Commerce and Product Visualization

Imagine being able to view any product in full 3D with accurate lighting—just from a few photos. NeRF allows retailers to create interactive product displays that feel real and responsive.

5. Real Estate and Architecture

Virtual tours of properties become much easier when you can capture a space in 3D from simple smartphone photos. Architects can also visualize early designs with natural lighting and realistic textures.

6. Cultural Heritage and Museums

Historical artifacts, monuments, and even archaeological sites can be preserved digitally using NeRF. This makes them accessible for education and research around the world.

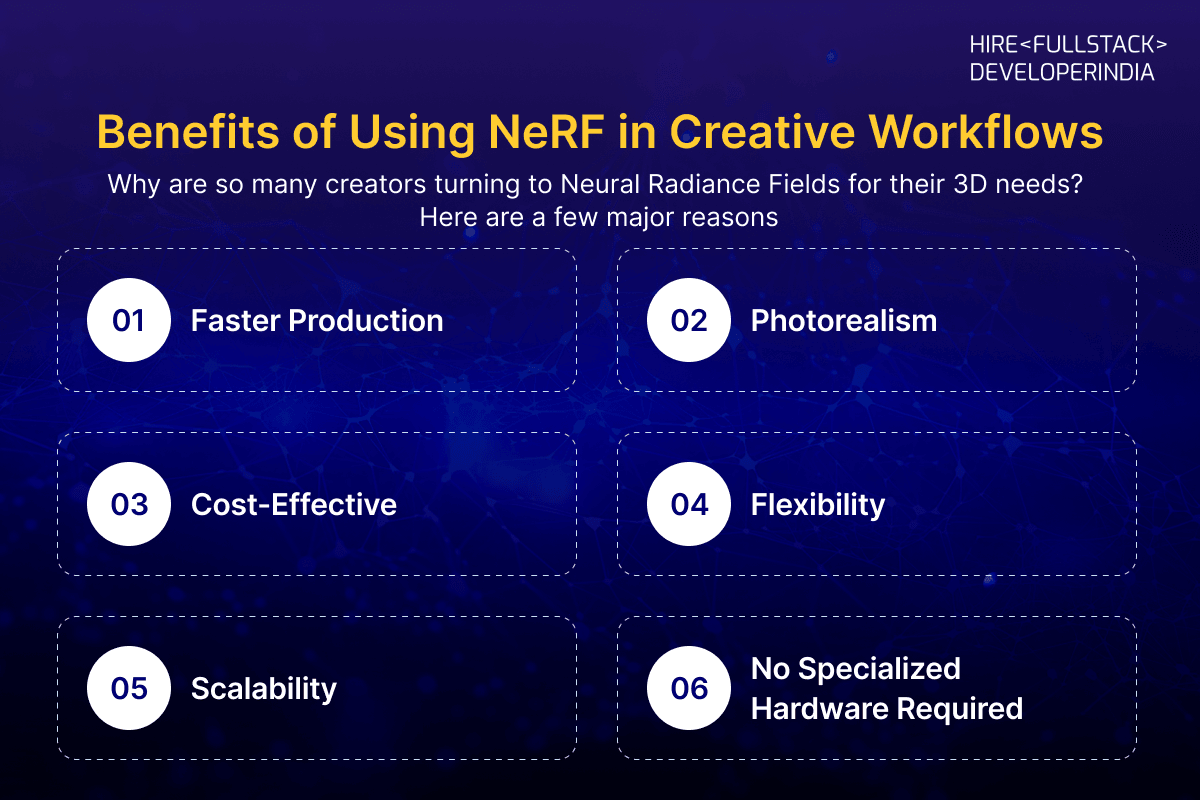

Benefits of Using NeRF in Creative Workflows

Why are so many creators turning to Neural Radiance Fields for their 3D needs? Here are a few major reasons:

- Faster Production: What used to take weeks can now be done in hours.

- Photorealism: Lighting, shadows, and reflections are almost indistinguishable from reality.

- Cost-Effective: No need for LiDAR scanners or motion-capture rigs.

- Flexibility: Scenes can be viewed from any angle—even ones not originally captured.

- Scalability: Perfect for building large 3D environments quickly.

- No Specialized Hardware Required: A decent GPU and a good camera are enough to get started.

By leveraging AI for 3D animation, NeRF empowers creators to spend less time on repetitive tasks and more time on storytelling and design.

Limitations and Current Challenges of NeRF

As promising as NeRF is, it’s not perfect.

- Training Time: Older versions could take hours to train. However, newer models like Instant-NGP are solving this.

- High Computational Load: Rendering high-resolution 3D views still demands GPU power.

- Static Scenes Only: Most NeRF implementations work only for still scenes. Capturing motion is still being researched.

- Hard to Edit: Since NeRFs aren’t mesh-based, they’re not easy to edit in tools like Unity or Unreal.

- Image Requirements: The more angles and better quality your photos, the better the result. Poor data equals poor reconstructions.

Despite these drawbacks, the pace of innovation in this space is impressive, and many of these problems are being addressed rapidly.

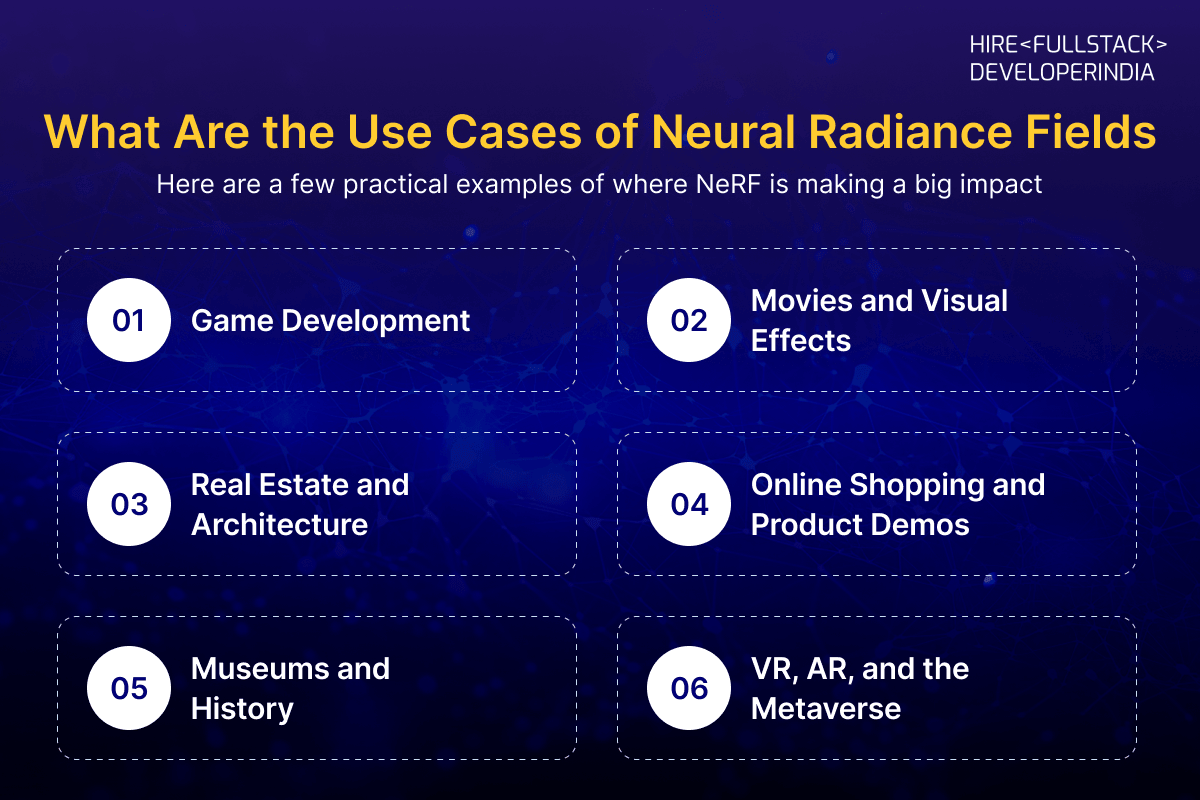

What Are the Use Cases of Neural Radiance Fields?

Neural Radiance Fields (NeRF) aren’t just a cool tech trick—they're actually being used in some really exciting ways across different industries. Here are a few practical examples of where NeRF is making a big impact:

- Game Development

Game designers are using NeRF to quickly turn real-world places into detailed 3D game environments. It saves a lot of time and effort when building realistic game levels.

- Movies and Visual Effects

Film studios can use NeRF to recreate real locations as digital sets. This means they don’t always have to shoot on location, and they get more creative freedom in post-production.

- Real Estate and Architecture

Agents and architects can take simple photos of a house or building and turn them into a full 3D tour. It's perfect for virtual walkthroughs and showing off designs before anything is built.

- Online Shopping and Product Demos

Retailers are starting to use NeRF to create 3D views of their products. Shoppers can look at an item from every angle, just like they would in a real store.

- Museums and History

NeRF helps capture and preserve historical places and objects in 3D. Museums can use it for virtual tours or to share exhibits with people all over the world.

- VR, AR, and the Metaverse

If you're building virtual reality worlds or AR experiences, NeRF is great for creating realistic spaces that feel like the real thing. It’s already being tested for metaverse environments.

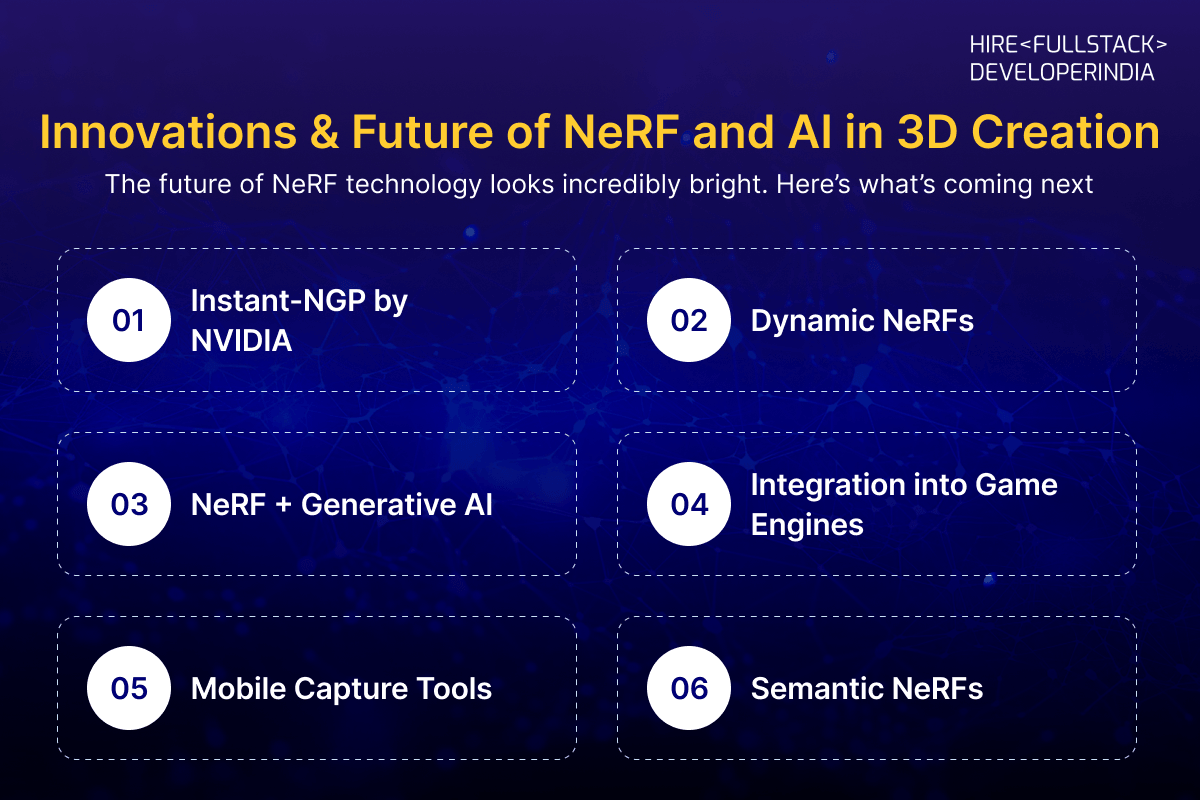

Innovations & Future of NeRF and AI in 3D Creation

The future of NeRF technology looks incredibly bright. Here’s what’s coming next:

- Instant-NGP by NVIDIA: This cuts NeRF training from hours to minutes, and in some cases seconds.

- Dynamic NeRFs: These models can handle motion—like people walking or leaves blowing in the wind.

- NeRF + Generative AI: Tools like DreamFusion by Google are combining NeRFs with AI imagination, creating 3D models from text prompts.

- Integration into Game Engines: Work is being done to convert NeRFs into mesh models that can be imported into engines like Unity or Unreal.

- Mobile Capture Tools: Apps like Luma AI and Polycam let you generate NeRFs using your smartphone.

- Semantic NeRFs: These combine object recognition and AI to create smarter, editable 3D models.

As these tools become more user-friendly, we’ll likely see an explosion of AI-generated 3D content across the web, games, and virtual spaces.

How to Get Started with NeRF for 3D Content

Curious to try it out for yourself? Here’s how:

Tools You Can Try

- Luma AI (iOS/Android): Point your phone camera, take a few shots, and get a 3D model.

- Instant-NGP (NVIDIA): Open-source project for developers wanting more control.

- Nerfstudio, DreamFusion, Plenoxels: Great for researchers or advanced users.

What You Need

- A decent camera (even a smartphone will do).

- A PC or laptop with a GPU (NVIDIA preferred).

- Some patience and a bit of practice!

Tips for Better Results

- Take clear images from multiple angles.

- Keep lighting consistent.

- Avoid reflective surfaces if possible.

- Use clean, uncluttered backgrounds for object scans.
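The "clear images" tip can be partly automated by screening out blurry frames before training. The sketch below uses a common heuristic, the variance of a Laplacian filter, where low values suggest few sharp edges. It is an illustration only: it works on grayscale images given as plain 2D lists, the names `laplacian_variance` and `keep_sharp` are made up for this example, and the threshold of 100.0 is an arbitrary assumption that would need tuning on real photos. Loading actual image files (for instance with Pillow or OpenCV) is left out.

```python
def laplacian_variance(gray):
    """Variance of a 4-neighbour Laplacian over the interior pixels."""
    rows, cols = len(gray), len(gray[0])
    responses = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            responses.append(
                gray[r - 1][c] + gray[r + 1][c]
                + gray[r][c - 1] + gray[r][c + 1]
                - 4 * gray[r][c]
            )
    mean = sum(responses) / len(responses)
    return sum((v - mean) ** 2 for v in responses) / len(responses)


def keep_sharp(frames, threshold):
    """Return the names of frames whose sharpness score meets the threshold."""
    return [name for name, gray in frames.items()
            if laplacian_variance(gray) >= threshold]


# Synthetic test images: a crisp checkerboard and a featureless gray frame.
checkerboard = [[255 if (r + c) % 2 == 0 else 0 for c in range(8)]
                for r in range(8)]
flat_gray = [[128 for _ in range(8)] for _ in range(8)]

frames = {"sharp.jpg": checkerboard, "blurry.jpg": flat_gray}
usable = keep_sharp(frames, threshold=100.0)  # assumed threshold, tune per dataset
```

Scores are only comparable between images of the same resolution and scene, so a threshold relative to the sharpest frames in a capture is often more practical than a fixed number.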

Conclusion

Neural Radiance Fields are changing how we think about 3D content creation. By using AI to simplify and speed up the entire process, NeRF technology is opening the doors for more creators—from solo artists to big studios—to build beautiful, immersive 3D scenes faster than ever before.

Whether you’re in gaming, animation, virtual tours, or digital art, the impact of AI 3D rendering and NeRF is undeniable. And with ongoing innovation, this is just the beginning.

If you’re excited about the future of 3D, now’s the perfect time to explore how Neural Radiance Fields can transform your workflow.
